My Life, My Job, My Career: How 5 Simple DeepSeek Helped Me Succeed

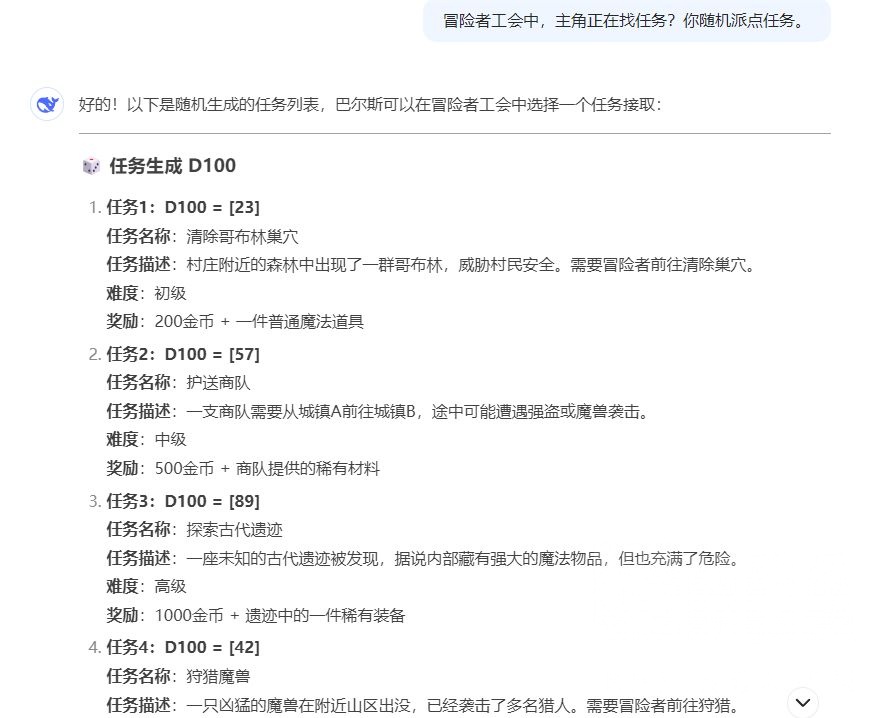

DeepSeek provides AI of comparable quality to ChatGPT but is completely free to use in chatbot form. A year-old startup out of China is taking the AI industry by storm after releasing a chatbot that rivals the performance of ChatGPT while using a fraction of the power, cooling, and training expense that OpenAI, Google, and Anthropic’s systems demand. Staying in the US versus taking a trip back to China and joining some startup that’s raised $500 million or whatever ends up being another factor in where the top engineers actually end up wanting to spend their professional careers. But last night’s dream had been different - rather than being the player, he had been a piece. Why this matters - where e/acc and true accelerationism differ: e/accs think humans have a bright future and are principal agents in it - and anything that stands in the way of humans using technology is bad. Why this matters - a lot of notions of control in AI policy get harder if you need fewer than a million samples to convert any model into a ‘thinker’: The most underhyped part of this release is the demonstration that you can take models not trained in any kind of major RL paradigm (e.g., Llama-70b) and convert them into powerful reasoning models using just 800k samples from a strong reasoner.

But I'd say each of them has its own claim as to open-source models that have stood the test of time, at least in this very short AI cycle, and that everyone else outside of China is still using. Researchers with Align to Innovate, the Francis Crick Institute, Future House, and the University of Oxford have built a dataset to test how well language models can write biological protocols - "accurate step-by-step instructions on how to complete an experiment to accomplish a specific goal". A company based in China, which aims to "unravel the mystery of AGI with curiosity", has released DeepSeek LLM, a 67 billion parameter model trained meticulously from scratch on a dataset consisting of 2 trillion tokens. To train one of its more recent models, the company was forced to use Nvidia H800 chips, a less powerful version of a chip, the H100, available to U.S.

But you had more mixed success when it comes to stuff like jet engines and aerospace, where there’s a lot of tacit knowledge in there and in building out everything that goes into manufacturing something that’s as fine-tuned as a jet engine. And if by 2025/2026, Huawei hasn’t gotten its act together and there just aren’t a lot of top-of-the-line AI accelerators for you to play with if you work at Baidu or Tencent, then there’s a relative trade-off. Jordan Schneider: Well, what is the rationale for a Mistral or a Meta to spend, I don’t know, a hundred billion dollars training something and then just put it out for free? Usually, in the olden days, the pitch for Chinese models would be, "It does Chinese and English." And then that would be the main source of differentiation. Alessio Fanelli: I was going to say, Jordan, another way to think about it, just in terms of open source and not as similar yet to the AI world, where some countries, and even China in a way, were maybe thinking our place is not to be at the cutting edge of this. In a way, you can start to see the open-source models as free-tier marketing for the closed-source versions of those open-source models.
